Organizational Problems are Wicked Problems

A wicked problem is one that is too complex and messy (comprising multiple problem-elements) to be easily defined. Because it can’t be defined, it can’t be resolved using regular analysis methods, such as those used to generate IT system requirements. Different stakeholders will define the problem in different ways, depending on the parts they have encountered in their work. The emergence of multiple problem-definitions as the problem is explored distinguishes “wicked” problems from the “tame” problems that organizational analysts and IT systems developers typically deal with. While tame problems can be defined in terms of goals and rules, and relate to a clear scope of action, wicked problems consist of many interrelated problems, each with its own organizational scope and goals. As a result, wicked problems have vague, emergent goals and boundaries. Ways of framing wicked problems are negotiated among stakeholders who hold radically different views of the organization (Rittel & Webber, 1973).

“It comes as no particular surprise to discover that a scientist formulates problems in a way which requires for their solution just those techniques in which he himself is especially skilled.”

Kaplan, Abraham (1964) “The Age of the Symbol—A Philosophy of Library Education” The Library Quarterly: Information, Community, Policy, Vol. 34, No. 4 (Oct., 1964), pp. 295-304

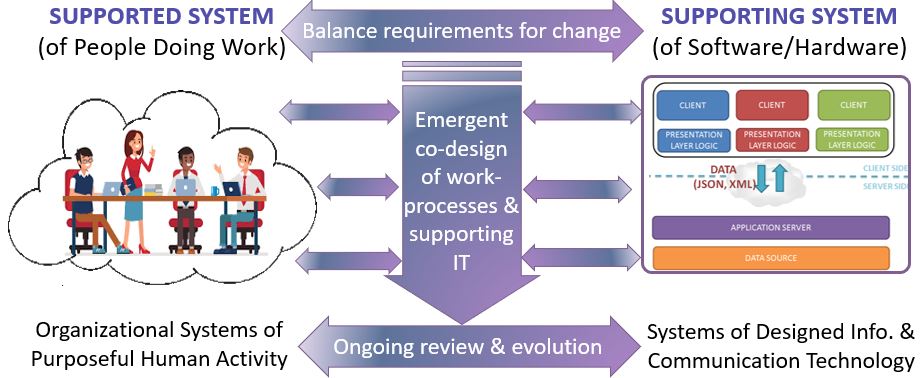

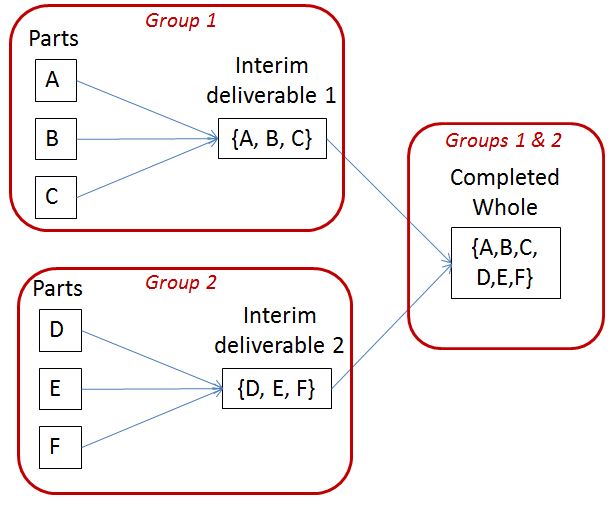

These types of problems are also known as systemic problems because we use systems thinking (a.k.a. systemic analysis) to resolve them. Systemic analysis methods use a “divide-and-conquer” approach to exploring problems. The sub-problems prioritized by various stakeholders are explored and debated across the wider group of change managers. Goals and potential solutions emerge as “the problem” is framed and re-framed in multiple ways over time, and across stakeholders. This process results in organizational learning, as stakeholders acquire an improved understanding of others’ perspectives across organizational functions and boundaries. Systemic analysis also lets change managers explore the “knock-on” impacts of change, so that they can appreciate conflicts and tradeoffs between perspectives and predict the impact of changes to one area of the organization on other areas and functions.

What are Wicked Problems?

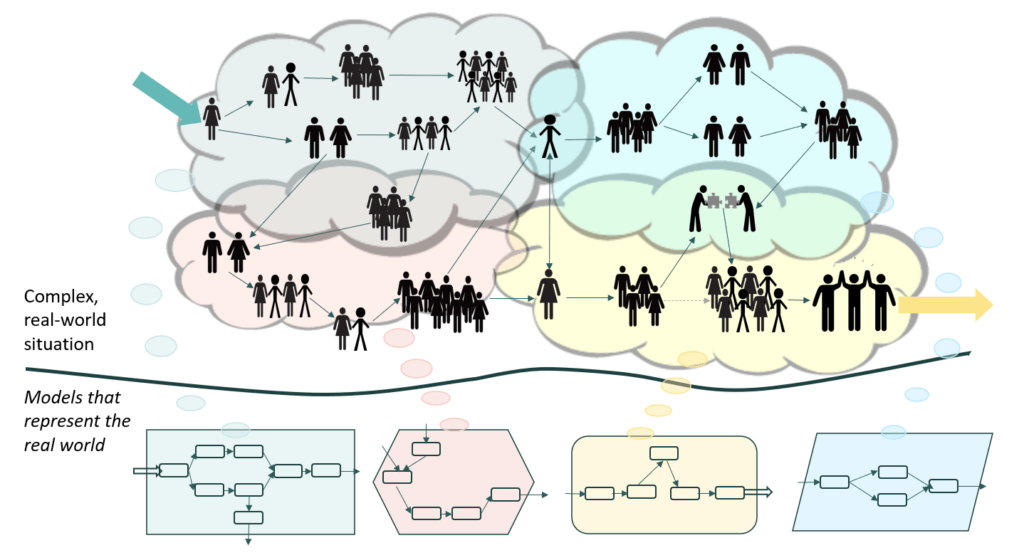

Wicked problems present as tangles of interrelated problems, or “messes” (Ackoff, 1974). Because these problems are so messy, they are defined by various stakeholders in multiple ways, depending on the parts that they perceive, which in turn depends on where they are in the organization, their experience, and their disciplinary background.

If you try to model a complex problem-situation, you will rapidly discover that any “system” of work consists of subsystems, the definition and scope of which depend on where the definer stands in the organization. To act upon a wicked problem, you need to understand the multiplicity of perspectives that various stakeholders take. Often, a single person will hold multiple perspectives depending on the role they are playing at any point in time. For example, I trained as an engineer, I was introduced to systemic analysis during my education, and I adopted a social science perspective as an academic. So I can happily (and obliviously) define any situation in three different ways, depending on which “hat” I am wearing when I do so!

Wicked problems are so-called because they are not “well-structured”, that is, they are not amenable to analytical methods of problem-solving. This means that analysts often experience difficulty in defining the problem that needs solving or in selecting an appropriate technique to model the problem.

“Successful problem solving requires finding the right solution to the right problem. We fail more often because we solve the wrong problem than because we get the wrong solution to the right problem.”

Russell Ackoff (1974) Redesigning the Future. Wiley.

Wicked Problems Require Systemic Analysis

As a result, wicked problems have a number of characteristics not found in the sorts of problems that professional analysts and change-agents are typically trained to handle. They are solved by trial and error, rely more on problem-negotiation than analysis, and need to be investigated rather than analyzed. Any analysis imposes a model or structure that includes some aspects of the situation and excludes others, and so carries an expectation that the elements found will be related in specific ways:

“… it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.”

Maslow, Abraham Harold (1966). The Psychology of Science: A Reconnaissance. Harper & Row.

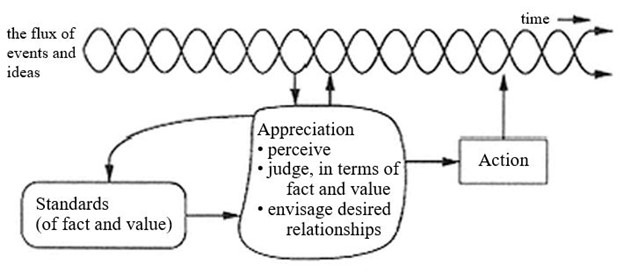

The bottom line is that, while most analysis approaches focus on the form of the solution, wicked problem analysis needs to investigate the nature and scope of the problem. Successful resolution of wicked problems requires appreciative design techniques (Vickers, 1968), where the definition of a solution emerges in tandem with the definition of the problem. Analysts must become enculturated in the problem-situation to understand the stakeholder perspectives that drive various definitions of wicked problems. They need to be familiar with systemic analysis of problems. They also need to be good facilitators, capable of negotiating solutions across multiple stakeholders with multiple viewpoints and priorities.

References

Mitroff, I.I., Kilmann, R.H. (2021). Wicked Messes: The Ultimate Challenge to Reality. In: The Psychodynamics of Enlightened Leadership. Management, Change, Strategy and Positive Leadership. Springer, Cham. https://doi.org/10.1007/978-3-030-71764-3_3

Pickering, Andrew (1995) The Mangle of Practice. University of Chicago Press, Chicago IL.

Rittel, H. W. J. (1972). Second Generation Design Methods. Design Methods Group 5th Anniversary Report: 5-10. DMG Occasional Paper 1. Reprinted in N. Cross (Ed.) 1984. Developments in Design Methodology, J. Wiley & Sons, Chichester: 317-327.

Rittel, H. W. J. and M. M. Webber (1973). “Dilemmas in a General Theory of Planning.” Policy Sciences 4, pp. 155-169.

Vickers G. (1968) Value Systems and Social Process. Tavistock, London UK.